Introduction

Welcome to Patina - a pure Rust project dedicated to evolving and modernizing UEFI firmware with a strong focus on security, performance, and reliability. This book serves as high-level documentation for developers and platform owners working with or contributing to Patina.

To put it simply, Patina is a UEFI spec compliant pure Rust DXE Core with an interface for writing monolithically compiled Patina components (drivers) using a dependency injection model. It should be noted that Patina takes a more opinionated stance on some implementation details to provide a more secure runtime, so we are not fully PI spec compliant. Due to this, there are some key platform requirements that differ from a non-Patina implementation. See Patina Requirements for more information regarding this.

This book is the "catch-all" for Patina: it contains various documentation regarding Patina that can be broken up into these 5 categories:

- Background of Patina and its goals.

- Integrating the Patina code into your platform.

- Developing Patina components.

- Contributing to Patina.

- Development guidance and standards that apply to both Patina and Patina component developers.

If you have not read this book before, we suggest you start with (1) Patina Background, which outlines the project's goals and design philosophy. You can also watch an overview of Patina presented at UEFI Plugfest 2025.

Patina In Action

If you want to see Patina being actively used and "in action", then check out these two repositories:

1. patina-qemu: This repository has two virtual platforms, both of which use the patina-dxe-core. You can find setup instructions for building and running them locally in that repository's readme.

2. patina-dxe-core-qemu: This repository contains

the patina-dxe-core binary crates used by the two virtual platforms in patina-qemu. If you'd like to experiment,

you can clone this repo, modify the DXE core, and use the override feature in patina-qemu to boot with your

customized build.

Important Crates and Repositories

The Patina project maintains a large list of distinct crates throughout our various repositories (See below) in order to support proper decoupling and faster build times. If you are just starting with Patina, there are two main crates that you should review:

1. patina_dxe_core: The library crate containing the Patina DXE Core struct definition. This struct is instantiated and configured by a platform in their own respective binary crate, which ultimately compiles to an EFI binary.

2. patina: The SDK for working with Patina, whether you are creating a Patina component or developing the Patina DXE core.

Putting it all together

The patina-dxe-core crate provides the UEFI spec compliant Patina DXE Core. The Core produces the EFI System Table (with the core provided services) and a PI spec compliant* DXE Dispatcher.

Outside of UEFI spec compliance, platforms can attach Patina Components, which are conceptually similar to UEFI components, but are pure Rust and monolithically compiled with the Patina DXE Core. Some components are written and maintained by Patina, but are still optional. Over time, the Patina project will add more generically useful components. We are expecting that additional components will be written by platform maintainers. These may be specific to their platform or generic for the Patina ecosystem. See Patina Component Model for more information.

flowchart TD

subgraph internal_crates["Internal Crates (1..N)"]

direction TB

internal2["Crate"]

internalN["Crate"]

end

subgraph plugin_crates["Component Crates (1..N)"]

direction TB

plugin2["Component"]

pluginN["Component"]

end

consumer_bin["Platform Patina DXE Core (binary)"] -- uses --> patina_core["patina_dxe_core"]

consumer_bin -. optionally uses .-> plugin_crates

patina_core -- uses --> internal_crates

patina_core -. depends on .-> patina_sdk["patina (SDK crate)"]

plugin2 -. depends on .-> patina_sdk

pluginN -. depends on .-> patina_sdk

internal2 -. depends on .-> patina_sdk

internalN -. depends on .-> patina_sdk

All Repositories

| Repository | Description |

|---|---|

| patina | Core codebase for Patina containing Patina-only crates. |

| patina-readiness-tool | A DXE core replacement binary that reviews HOBs passed from PEI to DXE to validate your platform is ready to use the Patina DXE Core. |

| patina-fw-patcher | A developer tool to quickly patch a new patina-dxe-core binary into a platform's firmware |

| patina-paging | A paging solution we believe is generic enough to be useful outside of Patina. |

| patina-mtrr | A x86_64 MTRR solution we believe is generic enough to be useful outside of Patina. |

| patina-edk2 | A collection of definitions and helper utilities designed for EDK II-style C firmware projects that integrate Patina. |

| patina-dxe-core-qemu | A reference implementation of patina-dxe-core for our two virtual QEMU platforms. |

| patina-qemu | Two reference virtual platforms (Q35, SBSA) |

| patina-devops | A repository containing github workflows, actions, and notebooks used across our repositories. |

Final Notes

This documentation aims to be as detailed as possible, not assuming any previous knowledge. However some general Rust knowledge is beneficial throughout the book, and some EDK II knowledge is beneficial to understanding how consume the final pure-Rust platform Patina DXE core in EDK II style firmware.

While you can navigate to any section in this book via the left-side navigation bar, here are the quick links to the start of the different sections mentioned above:

- Patina Background

- Platform Integration

- Component Development

- Contributing to Patina

- Developer Guides

Patina Overview at UEFI Plugfest 2025

Patina Background

Overview

Firmware and UEFI firmware in particular has long been written in C. Firmware operates in a unique environment compared to other system software. It is written to bootstrap a system often at the host CPU reset vector and as part of a chain of trust established by a hardware rooted immutable root of trust. Modern PC and server firmware is extraordinarily complex with little room for error.

We call the effort to evolve and modernize UEFI firmware in the Open Device Partnership (ODP) project "Patina". The remainder of this document will discuss the motivation for this effort, a high-level overview of the current state of Patina, and the current state of Rust in UEFI firmware.

Firmware Evolution

From a functional perspective, firmware must initialize the operating environment of a device. To do so involves integrating vendor code for dedicated microcontrollers, security engines, individual peripherals, System-on-Chip (SOC) initialization, and so on. Individual firmware blobs may be located on a number of non-volatile media with very limited capacity. The firmware must perform its functional tasks successfully or risk difficult to diagnose errors in higher levels of the software stack that may impede overall device usability and debuggability.

These properties have led to slow but incremental expansion of host firmware advancements over time.

Firmware Security

From a security perspective, firmware is an important component in the overall system Trusted Computing Base (TCB). Fundamental security features taken for granted in later system software such as kernels and hypervisors are often based on secure establishment in a lower layer of firmware. At the root is a concept of "trust".

While operating systems are attractive targets due to their ubiquity across devices and scale, attackers are beginning to shift more focus to firmware as an attack surface in response to increasingly effective security measures being applied in modern operating systems. Securing the early boot process revolves around key inflection points and protections applied between those points. The earliest point is the device "root of trust", where the system needs to ensure it begins operating in a trusted state. This is often performed by code in immutable Read-Only Memory ROM located in a SOC. Since size is extremely limited, this logic typically hands off quickly to code of larger size on some mutable storage such as SPI flash that is first verified by a key stored in the SOC. In general, this handoff process continues throughout the boot process as hardware capabilities come online enabling larger and more complex code to be loaded forming what is referred to as a "chain of trust". Eventually some code must execute on the host CPU, that code is often UEFI based firmware. While significant research has been devoted across the entire boot process, UEFI firmware on the host CPU presents a unique opportunity to gain more visibility into early code execution details and intercept the boot process before essential activities take place such as application of important security register locks, cache/memory/DMA protections, isolated memory regions, etc. The result is code executed in this timeframe must carry forward proper verification and measurement of future code while also ensuring it does not introduce a vulnerability in its own execution.

Performant and Reliable

From a performance perspective, firmware code is often expected to execute exceedingly fast. The ultimate goal is for an end user to not even be aware such code is present. In a consumer device scenario, a user expects to press a power button and immediately receive confirmation their system is working properly. At the minimum, a logo is often shown to assure the user something happened and they will be able to interact with the system soon. In a server scenario, fleet uptime is paramount. Poorly written firmware can lead to long boot times that impact virtual machine responsiveness and workload scaling or, even worse, Denial of Service if the system fails to boot entirely. In an embedded scenario, government regulations may require firmware to execute fast enough to show a backup camera within a fixed amount of time.

All of this is to illustrate that firmware must perform important work in a diverse set of hardware states with code that is as small as possible and do so quickly and securely. In order to transition implementation spanning millions of lines of code written in a language developed over 50 years ago requires a unique and compelling alternative.

Rust and Firmware

As previously stated, modern systems necessitate a powerful language that can support low-level programming with maximum performance, reliability, and safety. While C has provided the flexibility needed to implement relatively efficient firmware code, it has failed to prevent recurring problems around memory safety.

To get a better idea of the memory safety challenges in firmware written in C and to see real-world examples of where this has been a problem, refer to:

- Patina DXE Core Memory Strategy

- Real World Case Study: UEFI Memory Safety Issues Preventable by Rust

Stringent Safety

Common pitfalls in C such as null pointer dereferences, buffer and stack overflows, and pointer mismanagement continue to be at the root of high impact firmware vulnerabilities. These issues are especially impactful if they compromise the system TCB. Rust is compelling for UEFI firmware development because it is designed around strong memory safety without the usual overhead of a garbage collector. In addition, it enforces stringent type safety and concurrency rules that prevent the types of issues that often lead to subtle bugs in low-level software development.

Languages aside, UEFI firmware has greatly fallen behind other system software in its adoption of basic memory vulnerability mitigation techniques. For example, data execution protection, heap and stack guards, stack cookies, and null pointer dereference detection is not present in the vast majority of UEFI firmware today. More advanced (but long time) techniques such as Address Space Layout Randomization (ASLR), forward-edge control flow integrity technologies such as x86 Control Flow Enforcement (CET) Indirect Branch Tracking (IBT) or Arm Branch Target Identification (BTI) instructions, structured exception handling, and similar technologies are completely absent in most UEFI firmware today. This of course exacerbates errors commonly made as a result of poor language safety.

Given firmware code also runs in contexts with high privilege level such as System Management Mode (SMM) in x86, implementation errors can be elevated by attackers to gain further control over the system and subvert other protections.

Developer Productivity

The Rust ecosystem brings more than just safety. As a modern language firmware development can now participate in concepts and communities typically closed to firmware developers. For example:

-

Higher level multi-paradigm programming concepts such as those borrowed from functional programming in addition to productive polymorphism features such as generics and traits.

-

Safety guarantees that prevent errors and reduce the need for a myriad of static analysis tools with flexibility to still work around restrictions when needed in an organized and well understood way (unsafe code).

Modern Tooling

Rust includes a modern toolchain that is well integrated with the language and ecosystem. This standardizes tooling fragmented across vendors today and lends more time to firmware development. Examples of tools and community support:

-

An official package management system with useful tools such as first-class formatters and linters that reduce project-specific implementations and focus discussion on functional code changes.

-

High quality reusable bundles of code in the form of crates that increase development velocity and engagement with other domain experts.

-

Useful compilation messages and excellent documentation that can assist during code development.

-

A modern testing framework that allows for unit, integration, and on-platform tests to be written in a consistent way. Code coverage tools that are readily available and integrate seamlessly with modern IDEs.

Rust's interoperability with C code is also useful. This enables a phased adoption pathway where codebases can start incorporating Rust while still relying upon its extensive pre-existing code. At the same time, Rust has been conscious of low-level needs and can precisely structure data for C compatibility.

Patina in ODP

The Patina team in ODP plans to participate within the open Rust development community by:

- Engaging with the broader Rust community to learn best practices and share low-level system programming knowledge.

- Leveraging and contributing back to popular crates and publishing new crates that may be useful to other projects.

- A general design strategy is to solve common problems in a generic crate that can be shared and then integrate it back into firmware.

- Collaborating with other firmware vendors and the UEFI Forum to share knowledge and best practices and incorporate elements of memory safety languages like Rust into industry standard specifications where appropriate. Some specifications have interfaces defined around concepts and practices common in unsafe languages that could be improved for safety and reliability.

Looking forward, we're continuing to expand the coverage of our firmware code written in Rust. We are excited to continue learning more about Rust in collaboration with the community and our partners.

Current State

We began our journey with Rust in UEFI firmware by adding support for building Rust code in the edk2 build system used for C code. We still have this support and it worked well for integrating smaller pieces of Rust code into the larger, conventional C codebase. We wrote a few EFI modules with this approach including USB and HID DXE drivers written in Rust.

However, to truly realize our vision of the benefits gained from Rust in firmware, we needed to shift our primary work environment to a pure Rust workspace. Further, we chose to build an entire execution environment from the ground up in pure Rust. When surveying what environment this should be, we noted that PEI is very divergent across architectures and silicon vendors, while DXE operates in a more standardized environment with well defined entry criteria and an overall larger share of functionality and drivers. This led to writing a DXE Core entirely in Rust.

In the course of developing the Patina DXE Core, supporting functionality was needed that led to the some new crates being spun off from the work that will be published individually for reuse in other core environments or drivers. All of this work is part of the Patina project.

Right now, those include:

- An "advanced logger" crate for UEFI debug output.

- A Platform Initialization (PI) crate that provides a Rust interface and implemention for the UEFI PI specification.

- A Rust UEFI SDK crate that contains Rust implementation of common interfaces and code needed in both UEFI drivers and core environments like the Patina DXE Core.

- A generic paging crate that implements the functionality needed to manage page tables and memory mappings in x86/64 and AArch64 environments.

- A generic Memory Type Range Register (MTRR) crate that implements the functionality needed to manage memory type ranges in x86/64 environments.

Patina DXE Core

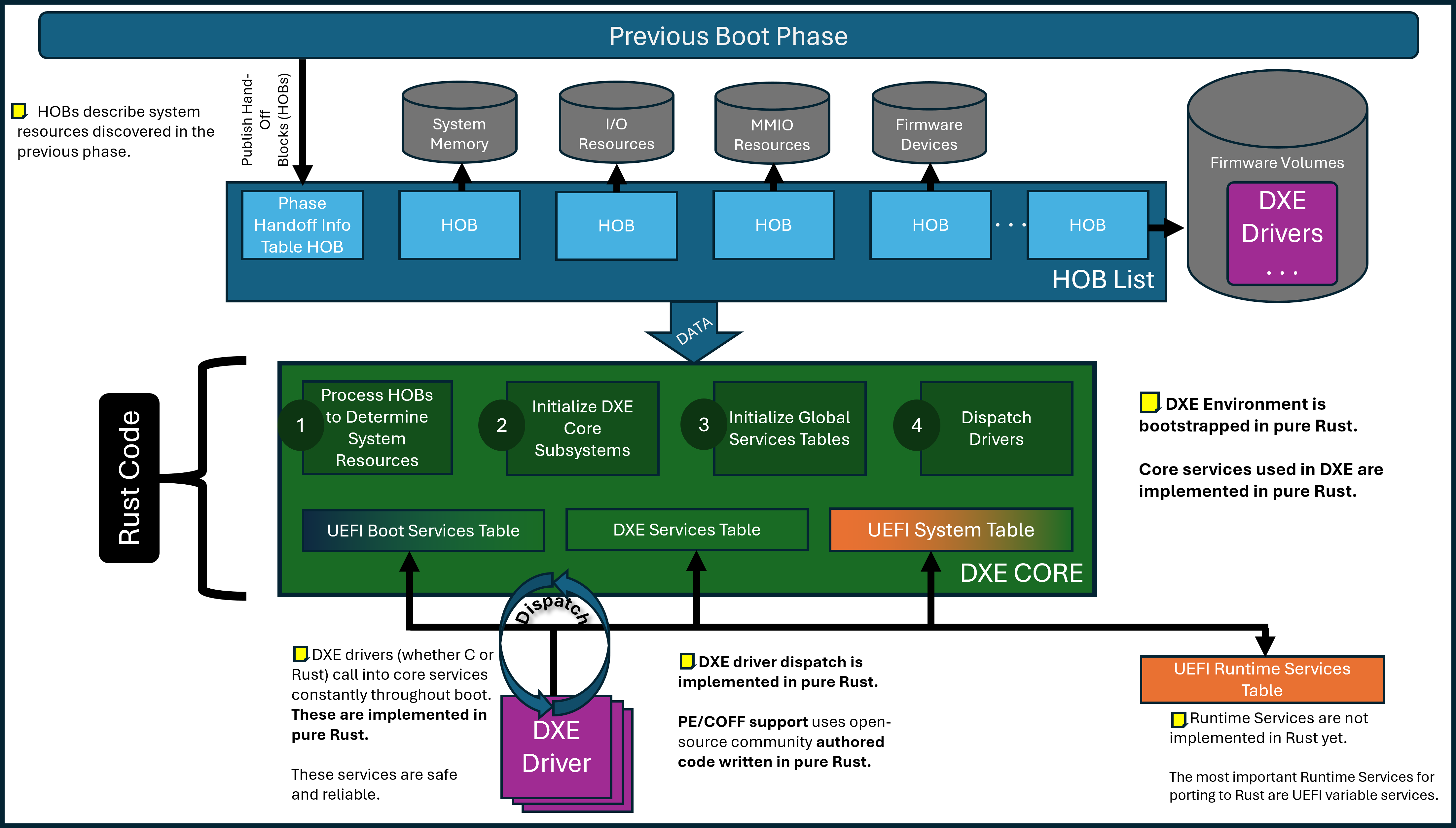

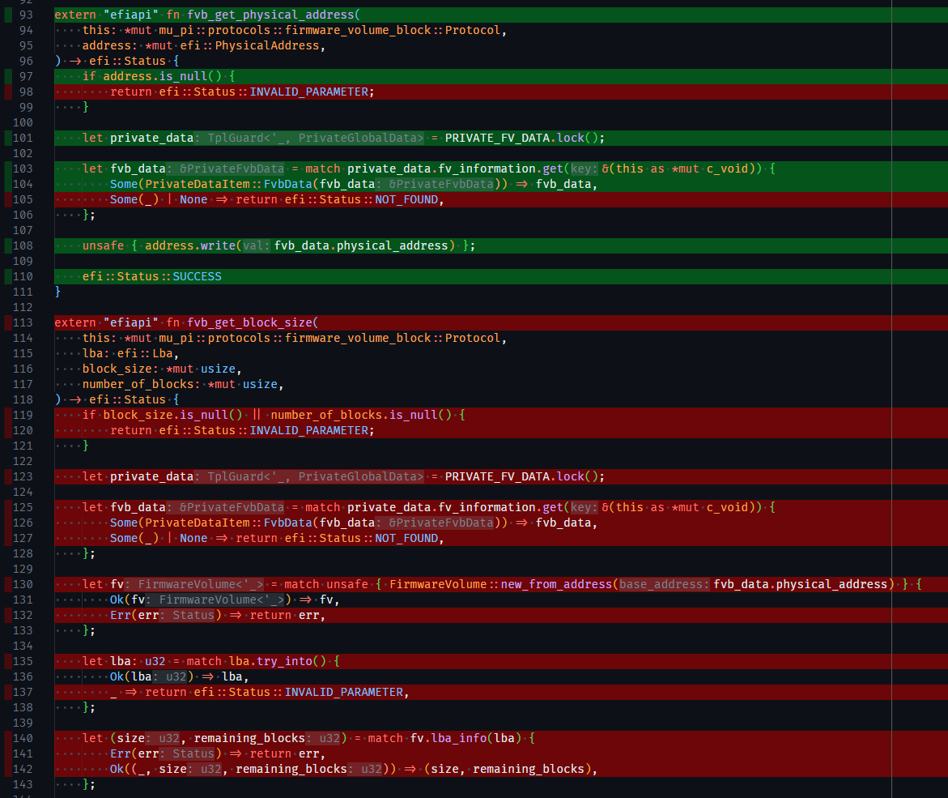

In the above high-level diagram, the Patina DXE Core takes system data input in the form of HOBs in the same way as the C DXE Core. The green box indicates that the core is written in Rust, while purple indicates that DXE drivers may be written either in C or Rust. Orange indicates code that is still written in C. For example, the UEFI Boot Services table and services themselves are largely written in pure Rust. The UEFI Runtime Services table itself has a Rust definition but many of the services are still implemented in C so it is orange.

Notable DXE Core Features

- AARCH64 and x86/64 support.

- Support for QEMU (Q35 and SBSA).

- Tested and developed on physical Intel and Arm hardware.

- Boots to Windows and Linux on these platforms.

- Performance record (FBPT) support.

- Page table management.

- A pure Rust dispatch system in addition to support for PI compatible FV/FFS dispatch.

- Parity with the C DXE Core in UEFI Self-Certification Test (SCT) results.

- ~70% unit test coverage in the Patina DXE Core (with a goal of >80% coverage).

- Support for Enhanced Memory Protections.

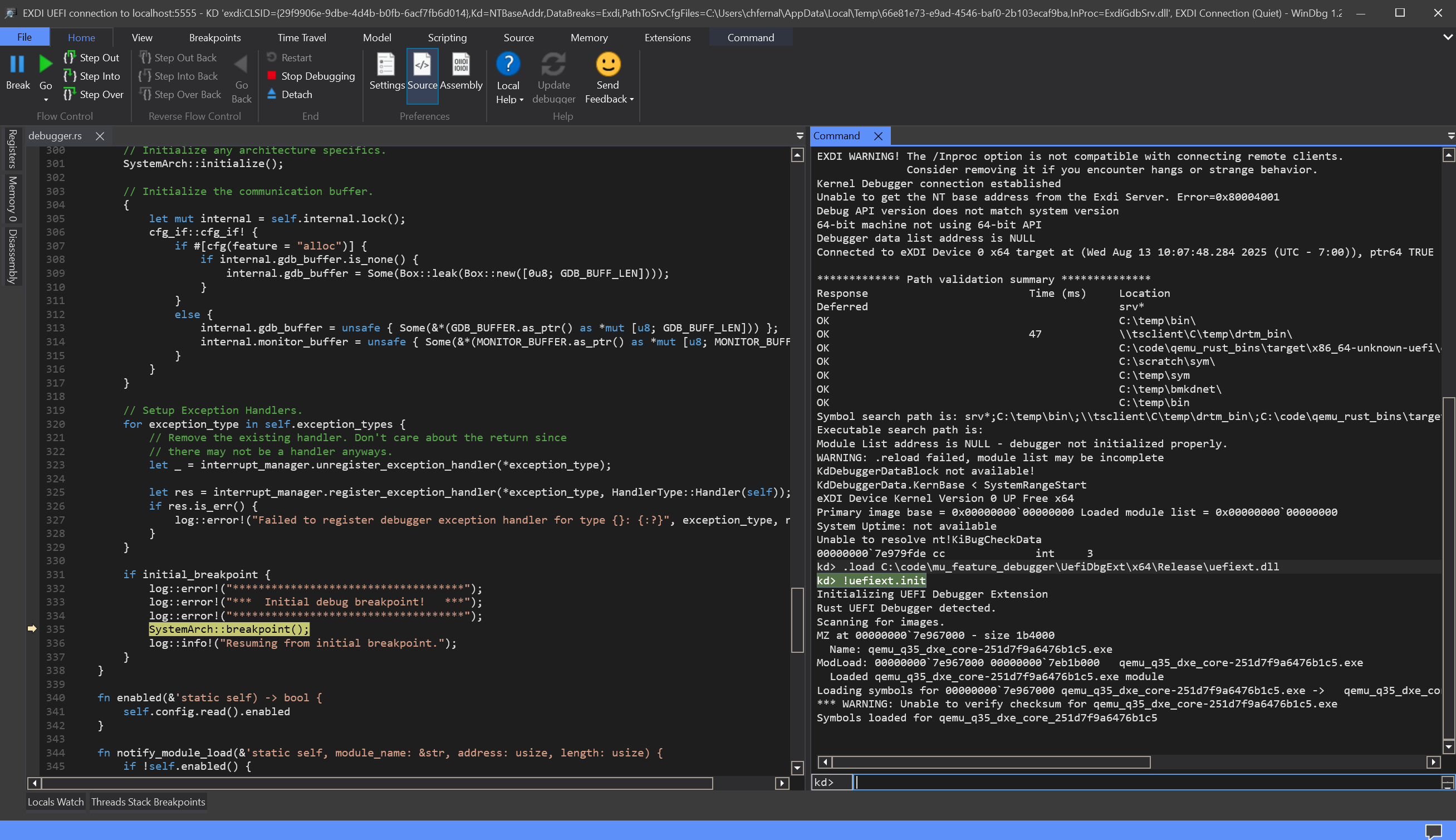

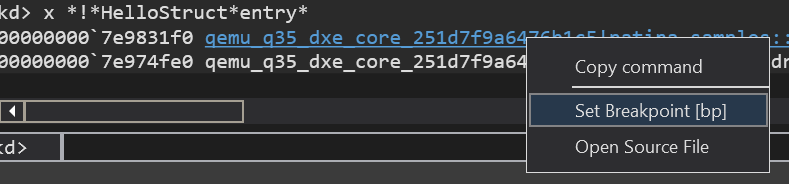

- Source-level debugging support.

- Built-in Brotli and EFI decompression support.

- Infrastructure (in the

patina::testmodule) for on-platform execution of unit tests.

The Patina DXE Core otherwise supports the normal responsibilities of a DXE Core except for the design restrictions described in the "Compatibility" section.

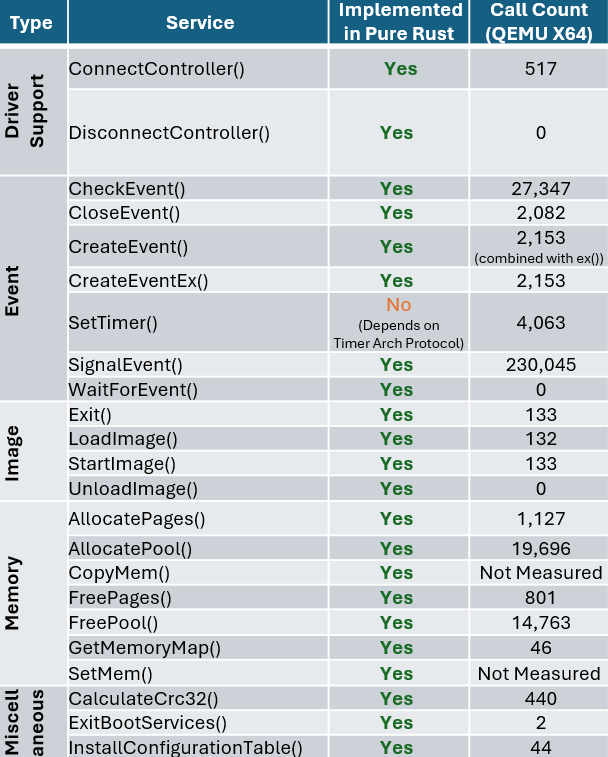

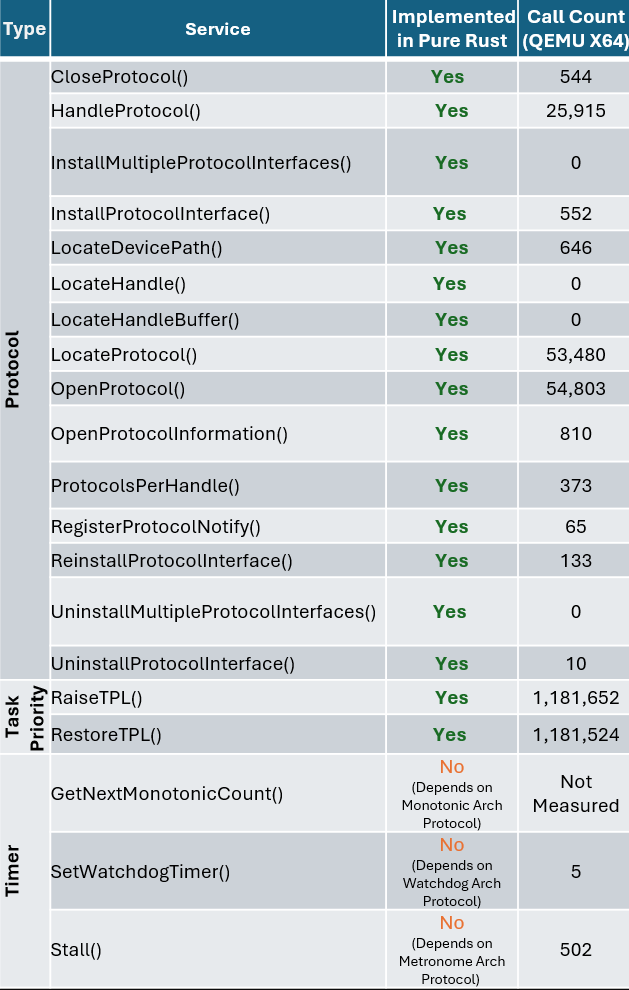

To illustrate why we believe the DXE Core is an ideal starting point, the following table summarizes the number of calls into boot services which are implemented in the DXE Core on a Q35 QEMU platform for reference. This is meant to show that while DXE drivers written in C are still dispatched and used during boot, the core services invoked and depended on by those drivers are written in Rust.

|  |

|---|

Rust DXE Scaling Plan

While the Patina DXE Core is mostly a drop-in replacement for the C DXE Core, it does differ in terms of design to accommodate the Rust language, its safety guarantees, and more modern software practices that contribute to higher quality interfaces and testing.

While more detailed design documents will be available in the Patina DXE Core codebase, a key design goal to call out now is support to transition to a larger share of Rust code in DXE. To best take advantage of Rust's static safety guarantees and to avoid the need for unsafe code in interfacing between components (e.g. protocol database), we have implemented the ability for the Patina DXE Core dispatch process to dispatch platform defined static components called "components". Components are selected for dispatch by the platform and can share data and services with each other but through Rust interfaces that are safe and statically checked versus the dynamic and disjoint nature of the protocol database in the C DXE Core.

For an example of how the Patina DXE Core is instantiated and customized in a platform binary, see the Patina DXE Core Integration Guide.

Rust is an exciting new next step and there is more to share about the Patina DXE Core in future documentation.

Integration

This section is not meant to be a comprehensive guide to integrating Rust into UEFI firmware and more detailed information is available. This section is meant to share a high-level sense of how the Patina DXE Core is integrated into a platform.

The following integration documents might be helpful if you're beginning to work with the Patina DXE Core:

patina_dxe_core as a Library Crate

The Patina DXE Core itself is a library crate. This means a single set of common DXE Core is provided that can be linked into a binary crate. The binary crate is owned by the platform. The purpose of this separation is to allow the DXE Core to be reused across multiple platforms and to allow the platform to provide the necessary configuration and platform specific code to the DXE Core when it uses the DXE Core interface. The binary crate built by the platform is what produces the .efi DXE Core binary.

This separation also means that a Patina DXE Core can simply be swapped with the C DXE Core in an existing platform. The Patina DXE Core .efi file produced by the pure Rust platform binary crate can be placed into the flash map of the firmware volume that contains the DXE Core.

Platform Customization

The platform binary crate is where platform-specific customization is done. For example, the Patina DXE Core depends on a UART. However, the platform can configure the UART passed to the DXE Core to be either an I/O or MMIO UART and configure the UART base address, baud rate, stride size, and other parameters. The platform can specify pure Rust components to dispatch in the Patina DXE Core as well.

Transition Tooling

We plan to provide a "DXE Readiness" tool that will help test the input data (e.g. HOBs) and other system state to determine any compatibility issues and provide guidance where possible. We're hoping this will make the Patina DXE Core onboarding experience easier but also provide more visibility into the DXE Core's requirements and operating state in general.

Testing

Three main types of testing are currently supported.

- Unit tests are written in the exact file that you are working in. Tests are written in a conditionally compiled

sub-module and any tests should be tagged with

#[test]. - Integration tests are very similar to unit testing, however, the developer does not have access to the internal state of the module. Only the external interfaces are being tested. Cargo will detect and run these tests with the same command as for unit tests. More information about integration tests are available in the cargo book entry.

- On-platform tests are supported with code in a module called

patina::testthat provides a testing framework similar to the typical rust testing framework. The key difference is that instead of tests being collected and executed on the host system, they are instead collected and executed via a component (patina::test::TestRunner) provided by the same crate. The platform must register this component with theDXE core. The DXE core will then dispatch this component, which will run all registered tests.

Compatibility

The Patina DXE Core is not only written in a newer, safer language but it is also designed for modern, more secure software practices. This means not everything that worked in the C DXE Core will work in the Patina DXE Core.

The main areas at this time that are not supported are:

- A priori dispatch of drivers.

- Traditional SMM support.

- "Dual Mode" drivers. For example, a driver that can run in both PEI and DXE (rarely used).

The Patina DXE Core also sets up memory protections and requires a more accurately and comprehensively defined memory map. The platform will likely need to describe more resources than before (via resource descriptor HOBs) so pages can be mapped correctly and UEFI code that violates memory protections will need to be fixed. For example, null pointer dereference detection and stack guard are active so code (C DXE driver or a third-party option ROM) will have memory protection violations caught at runtime.

For more details about mememory management in Patina see Memory Management.

Performance

While Rust provides some drawbacks when compared to C (e.g.

binary size),

it is generally the most performant of the memory-safe languages and adds comparatively minimal overhead. It is a more

feature-rich language; for example, exposing complex data structures like HashMaps, Vectors, iterators (compare to the

simple linked lists used in C). Patina uses these abstractions to provide more robust implementations of core

functionality and enables core CPU capabilities differently than standard C firmware; as such, simple wall clock timing

is not a fully accurate performance comparison. However, as a baseline measurement, with Patina v16.0 running on Intel

x64 hardware, the Rust DXE core executes approximately 10% slower than the C core when timed across identical

checkpoints (with magnitude in tens of ms).

The Request For Comments (RFC) Lifecycle

Typically small to medium changes such as bugfixes, existing implementation improvements, and documentation are handled via pull requests. Depending on the size they may also have an associated github issue or discussion. These are expected to be simple changes without much controversy.

The RFC process comes into play when a particular change becomes large, introduces new features, or breaks existing interfaces. In these scenarios, the developer is expected to follow the RFC process outlined below to ensure not only proper involvement from maintainers, but also from the community as a whole. The goal is to reach a community consensus before introducing large changes as described above.

As a developer, you should use your best judgement to determine if an RFC is required or not. Maintainers have the right to ask that an RFC is created for a submitted pull-request, or an RFC is converted directly to a pull-request, depending on the proposal.

The complete RFC process is:

- Create a new branch for your RFC.

- Copy the template from

docs/src/rfc/template.mdIto a new file in thedocs/src/rfc/textdirectory named0000-<feature-name>.mdwhere0000is a placeholder until the RFC is accepted (so use0000in your PR) and<feature-name>is a short name for the feature. - Fill out the RFC template with your proposal.

- Submit a pull request (PR) with your RFC.

- The PR will be discussed, reviewed, and may be iteratively updated.

- Once there is consensus, one of the following will occur:

- If approved, the RFC will be merged and assigned an official number.

- If rejected, the RFC will be merged to the rejected directory and assigned an official number.

The RFC Life Cycle

Each RFC goes through these stages:

- Draft: The initial state when a PR is opened. The community and relevant teams provide feedback.

- Final Comment Period (FCP): Once there is rough consensus, an FCP of 7–10 days starts. During this time, final objections can be raised.

- Merged: After FCP with no blocking concerns, the RFC is merged and becomes official.

- Postponed: RFCs may be deferred due to lack of clarity, priority, or readiness.

- Rejected: With strong reasoning and community consensus, RFCs can be declined.

---

config:

layout: elk

look: handDrawn

---

graph TD;

Draft --> FCP --> Merged;

Draft --> Postponed;

Draft --> Rejected;

Implementing and Maintaining an RFC

Once accepted:

- The implementation is tracked through linked issues or repositories.

- Any changes during implementation that deviate from the RFC must go through a follow-up RFC or an amendment process.

- An RFC can be revised in-place via a new RFC that supersedes or modifies the previous one.

Rejected RFCs

Due to community feedback, some RFCs may be rejected. In order to track these and be able to reference back to them,

these RFCs are maintained in the Patina repo as files in docs/src/rfc/rejected. Each merged RFC in Patina will have

a unique number to reference it by, whether it was merged to the text (approved) or rejected directories; that is

RFC numbers shall be unique in Patina regardless of whether the RFC was approved or rejected.

Rejected RFCs must contain a new section that summarizes the community's decision to reject the RFC. The PR remains the source of the full community discussion.

Following the rejection of an RFC, that RFC may be raised to the community again at some point in the future. In this case, a new RFC should be created that points back to the original RFC and explains what is different about the new proposal or why the original proposal is appropriate to bring back to the community.

Code Organization

Patina contains many constituent parts. This document describes the organization of the overall codebase, including the key dependencies that are shared between the Patina DXE Core and other components. The goal is to provide a high-level overview of these relationships.

---

title: High-Level Repo Layout

displayMode: compact

config:

layout: elk

look: handDrawn

gantt:

useWidth: 400

compact: true

---

graph TD

A[Patina Repository] --> B[**core** - Core-specific functionality]

A --> C[**sdk** - Software Development Kit]

A --> D[**components** - Feature Components]

A --> E[**patina_dxe_core** - DXE Core Library]

B --> G[Debugger]

B --> H[Section Extractor]

B --> I[Stacktrace]

C --> J[**patina** - SDK library]

J --> K[Common Types and Definitions]

J --> L[Boot Services]

J --> M[Runtime Services]

J --> N[Component Infrastructure]

J --> O[Logging and Serial Support]

D --> P[Advanced Logger]

D --> Q[Management Mode Support]

D --> R[Performance Measurement Support]

D --> S[Component Samples]

E --> T[Examples and Core Functionality]

This is meant to be a living document, and as the codebase evolves, this document should be updated to reflect the current state.

General Principles

As we build the elements necessary for a functional UEFI firmware, many supporting systems must necessarily be created along the way. In the end, a set of largely independent software entities are integrated to ultimately fulfill the dependencies necessary for functional firmware. The fact that code was conceived to support UEFI firmware does not always mean it is intrinsically coupled with UEFI firmware.

The principles described here are not meant to be more detailed or complex than necessary. Their goal is to support the organization of Rust code developed in this project in a consistent manner. It is not a goal to describe anything beyond what is necessary to organize the code per the guidelines given.

Code Cohesion and Coupling

Cohesion is a popular software concept described in many places including Wikipedia.

When speaking of cohesion generally, it is a degree to which the elements inside a software container belong together. In different languages and environments, what is a “container” might vary. But we know that containers with high cohesion are easier to understand, use, and maintain.

Coupling is another concept commonly covered including its own page on Wikipedia. This definition is taken directly from there:

"In software engineering, coupling is the degree of interdependence between software modules, a measure of how closely connected two routines or modules are, and the strength of the relationships between modules. Coupling is not binary but multi-dimensional."

Understanding coupling is easy. Minimizing coupling in practice is hard. Coupling is a key driver of technical debt in code. Having unrelated subsystems tightly coupled will only worsen over time. Developers will use the smallest amount of coupling as a precedent for further coupling, creating a self-perpetuating cycle. This is a major factor in poor software design over time. Tight coupling results in:

- Systems that are difficult to understand and maintain – If you want to understand how one thing works that is coupled, you must now understand how everything else coupled to it works. They are coupled into one system.

- Systems that are difficult to refactor – To refactor coupled systems, you must refactor all coupled systems.

- Systems that are difficult to version – If something is changed in a multi-coupled system, the whole system’s version is revised and published. That does not reflect the actual degree of change in the individual elements of the system.

- Systems that are more difficult to test – A coupled system requires testing layers, dependencies, and interfaces irrelevant the interface initially being tested.

This essentially forms "spaghetti code". Spaghetti code is relatively easy to identify in existing code. Spaghetti code often begins because of a slip in coupling in one part of the system that starts the cycle. In the end, no one is quite sure who is responsible for the spaghetti code and how it got that way, but it did. Now it's a huge mess to clean up and many parts of the system must be impacted to do so. Depending on the complexity of the system, now tests, documentation, repo organization, and public APIs all must change. This is the "ripple" effect coupling has where the ripple grows larger based on the degree of coupling and size of the system.

Code in the Patina DXE Core should strive to achieve high cohesion and low coupling in the various layers of "containers". This results in higher quality software.

SOLID

Certain SOLID principles apply more broadly outside of pure object-oriented design than others.

Single Responsibility Principle

The single responsibility principle applies in many situations. When designing a set of code, we should ask, “What is the responsibility of this code? Does it make sense that someone here for one responsibility cares about the other responsibilities?”

Given that we are thinking about responsibility more broadly than individual classes, we will take on multiple responsibilities at a certain level. For example, a trait might focus on a single responsibility for its interface but a module that contains that trait might not. It is not so important to literally apply a single responsibility to each layer of code when thinking about organization, but it is helpful to consider responsibilities and how they relate to the overall cohesion and coupling of what is being defined.

Interface Segregation

Another SOLID principle that has utility outside designing classes is the interface segregation principle, which states that “no code should be forced to depend on methods it does not use”. That exact definition applies more precisely to granular interfaces like traits, but the idea is useful to consider in the larger composition of software as well as it affects the cohesion and coupling of components. We should try to reduce the extraneous detail and functionality in code, when possible, to make the code more portable, testable, and maintainable.

Organizational Elements: Crates and Modules

A package is a bundle of one or more crates. A crate is the smallest amount of code the Rust compiler considers at a time. Code is organized in crates with modules. All of these serve a purpose and must be considered.

For example, modules allow similar code to be grouped, control the visibility of code, and the path of items in the module hierarchy. Crates support code reuse across projects – ours and others. Crates can be independently versioned. Crates are published as standalone entities to crates.io. Crates allow us to clearly see external dependencies for the code in the crate. Packages can be used to build multiple crates in a repo where that makes sense like a library crate that is available outside the project but also used to build a binary in the package.

When we think about code organization at a high-level, we generally think about crates because those are the units of reusability across projects. That’s the level where we can clearly see what functionality is being produced and consumed by a specific set of code. Modules can fall into place within crates as needed.

Therefore, it is recommended to think about organization at the crate level.

- What is the cohesion of the code within this reusable unit of software?

- If a project depends upon this crate for one interface it exposes, is it likely that project will need the other interfaces?

- Are the external dependencies (i.e. crate dependencies, feature dependencies) of this crate appropriate for its purpose?

- Is the crate easy to understand? Are the interfaces and their purpose well documented? If someone wants to understand the core purpose of this crate, is that easy? Is there unrelated content in the way?

Repo and Packages

All Patina code is placed into the patina repo unless it has been carefully designed to be independent of Patina and

serve a Rust audience beyond UEFI.

Code Organization Guidelines

These guidelines consider the placement and organization of code to support long-term maintenance and usability. In addition, the goal is to employ the software principles described in the previous section to publish crates to the wider community.

Note: These categories are an initial proposal based on code trends that have developed over time in our work and subject to change based on review.

- Core Specific Crate (

core)- Functionality exclusively used in core environment like DXE Core, MM Core, and PEI Core.

- Examples: DXE Core, Event infrastructure, GCD, memory allocator

- Components (

components)- Functionality for features provided using the Patina component model.

- Module Development (SDK) Crate (

sdk)- Functionality necessary to build UEFI modules.

- Can be used by core or individual driver components.

- Examples:

- Boot Services & Runtime Services

- Device Path services

- Logging related (for modules to write to logs; not the adv logger protocol producer code for example)

- GUID services

- Performance services

- TPL services

- Functionality necessary to build UEFI modules.

If a more generic location for crates is needed, a misc directory made be created in the patina workspace.

This document does not intend to define exact mappings of current code to crates, that is out of scope. Its goal is to define the guidelines for managing crates.

Crate Dependencies

The matrix below shows allowed dependencies for each class of crate defined in the previous section.

| Core | SDK | Components | Feature | Generic | |

|---|---|---|---|---|---|

| Core | x | Y | N | N | Y |

| SDK | N | x | N | N | Y |

| Components | N | Y | x | N | Y |

Key: Y = Allowed, N = Not Allowed, x = Self-dependencies within category

Separating out generic code is beneficial because it allows the code to be reused in the greatest number of places including outside the UEFI environment in host unit tests.

Patina DXE Core Memory Strategy

Memory Safety in Rust-Based Firmware

Executive Summary

Patina provides a memory-safe Rust UEFI development model that eliminates entire classes of memory safety vulnerabilities present in traditional C-based (e.g. EDK II) firmware. This document focuses specifically on Rust's memory safety benefits and capabilities that provide tangible security improvements for firmware development.

This document explains:

- Why memory safety is a critical challenge in current C-based UEFI firmware

- How Rust's memory safety features and Patina's architecture address these challenges

- Why the Patina DXE Core implementation provides the most immediate security impact

If you are trying to understand why a programming language matters for firmware security, this document is for you.

Document Structure

- Problem: Memory safety challenges in current C-based UEFI firmware

- Solution: Rust's memory safety advantages and guarantees

- Implementation Prioritization: Why the DXE Core provides maximum memory safety impact

1. The Problem: Memory Safety Challenges in C Firmware

Traditional firmware development in C suffers from systemic memory safety issues that constantly present the opportunity for security vulnerabilities. For example, global tables of opaque function pointers are common in C firmware. The specific issues with that pattern are described further below.

Global Function Pointer Vulnerabilities

Traditional EDK II firmware relies heavily on global tables of function pointers, such as:

// Boot Services Table - Global function pointers

typedef struct {

EFI_ALLOCATE_POOL AllocatePool;

EFI_FREE_POOL FreePool;

// ... dozens more function pointers

} EFI_BOOT_SERVICES;

extern EFI_BOOT_SERVICES *gBS; // Global pointer accessible everywhere

This leaves firmware vulnerable to several classes of memory safety problems:

- Pointer Corruption: Memory corruption can overwrite function pointers, potentially leading to arbitrary code execution

- No Type Safety: Function pointers can be cast to incompatible types, resulting in system instability

- Runtime Verification: No compile-time verification that function pointers point to valid functions

- Global Mutability: Global accessibility allows potential modification of critical function pointers

It is difficult for a platform owner to assert confidence that these global pointers are never corrupted or misused, especially when third-party drivers are loaded into the same address space. It has been observed that third-party drivers DO modify these global pointers. In that case, if a vulnerability is discovered in the driver that has patched the table, it can be exploited to compromise the entire firmware environment as firmware now calls into the vulnerability at a global-scale. In addition, third-party drivers may "fight" over these global pointers, leading to a situation where even their modification is overwritten by another driver.

This creates a fragile and insecure execution environment.

Does memory safety really matter? Where's the evidence?

For a more detailed analysis of real UEFI security vulnerabilities that would be prevented by Rust's memory safety features, see UEFI Memory Safety Case Studies.

The UEFI (EDK II) Separate Binary Model

In this model, each driver is compiled into a separate PE/COFF binary:

Platform.dsc defines drivers to build:

MyDriverA/MyDriverA.inf -> MyDriverA.efi (separate binary)

MyDriverB/MyDriverB.inf -> MyDriverB.efi (separate binary)

Platform.fdf packages binaries into flash images:

FV_MAIN {

INF MyDriverA/MyDriverA.inf

INF MyDriverB/MyDriverB.inf

}

Limitations of Separate Binaries:

- Compilation Isolation: Each driver compiles independently with no visibility into other drivers.

- Separate Address Spaces: Each driver has isolated memory spaces with potential for ABI mismatches.

- Opaque Memory Origination: It is difficult or impossible to trace memory ownership and lifetimes across binaries. Pointers have to be "trusted" to point to the correct objects of the correct size in the correct location.

- Limited Optimization: No cross-driver optimization possible.

2. Solution: Rust Memory Safety with Patina

Rust's Memory Safety Advantages

The Borrow Checker: Compile-Time Memory Safety Analysis

Rust's borrow checker is a sophisticated static analysis system that prevents memory safety violations at compile time—before code ever executes. Unlike C, where memory safety bugs like use-after-free, double-free, and buffer overflows can lurk undetected until runtime (often in production systems), Rust's borrow checker enforces three fundamental rules that firmware developers must write code to comply with:

- Ownership: Every value has exactly one owner at any time

- Borrowing: References must always be valid for their entire lifetime

- Mutability: Data can be accessed immutably by many or mutably by one, but never both simultaneously

This means:

- No use-after-free errors: The borrow checker ensures references cannot outlive the data they point to

- No double-free errors: Ownership tracking prevents the same memory from being freed multiple times

- No data races: Mutability rules prevent concurrent access violations that could corrupt critical firmware state

- No buffer overflows: Rust's array bounds checking and safe abstractions eliminate this entire vulnerability class

This is done at compile time, so there is no runtime performance cost. In Rust (and Patina), developers write code that is guaranteed to be memory safe by the compiler.

Patina Services vs. Global Function Pointers

Patina implements a trait-based service system to replace global function pointers:

// Rust service definition with compile-time safety

trait MemoryService {

fn allocate_pool(&self, pool_type: MemoryType, size: usize) -> Result<*mut u8, MemoryError>;

fn free_pool(&self, buffer: *mut u8) -> Result<(), MemoryError>;

}

// Services are dependency-injected, not globally accessible

fn component_entry(memory: Service<dyn MemoryService>) -> Result<(), ComponentError> {

// Compiler verifies this service exists and has the correct interface

let buffer = memory.allocate_pool(MemoryType::Boot, 1024)?;

// ...

}This provides:

- Compile-Time Verification: The type system ensures services implement required interfaces correctly

- Controlled Access: Services are dependency-injected rather than globally mutable

- Interface Safety: Traits ensure all implementations provide consistent, type-safe interfaces

Patina's Monolithic Compilation Model

Patina compiles all components into a single binary:

#![allow(unused)] fn main() { extern crate patina_dxe_core; extern crate patina; extern crate patina_ffs_extractors; use patina::component::component; use patina_ffs_extractors::LzmaSectionExtractor; // Note: Begin mock types for compilation #[derive(Default)] struct PlatformConfig { secure_boot: bool, } pub struct MemoryManagerExampleComponent; #[component] impl MemoryManagerExampleComponent { fn new() -> Self { MemoryManagerExampleComponent } fn entry_point(self) -> patina::error::Result<()> { Ok(()) } } pub struct SecurityPolicyExampleComponent; #[component] impl SecurityPolicyExampleComponent { fn new() -> Self { SecurityPolicyExampleComponent } fn entry_point(self) -> patina::error::Result<()> { Ok(()) } } pub struct DeviceDriverExampleComponent; #[component] impl DeviceDriverExampleComponent { fn new() -> Self { DeviceDriverExampleComponent } fn entry_point(self) -> patina::error::Result<()> { Ok(()) } } // Note: End mock types for compilation use patina_dxe_core::*; struct ExamplePlatform; impl ComponentInfo for ExamplePlatform { fn configs(mut add: Add<Config>) { add.config(PlatformConfig { secure_boot: true }); } fn components(mut add: Add<Component>) { add.component(MemoryManagerExampleComponent::new()); add.component(SecurityPolicyExampleComponent::new()); add.component(DeviceDriverExampleComponent::new()); } } // No default implementation overrides impl MemoryInfo for ExamplePlatform { } impl CpuInfo for ExamplePlatform { } impl PlatformInfo for ExamplePlatform { type MemoryInfo = Self; type CpuInfo = Self; type ComponentInfo = Self; type Extractor = LzmaSectionExtractor; } static CORE: Core<ExamplePlatform> = Core::new(LzmaSectionExtractor::new()); }

Monolithic Compilation Benefits

- Cross-Module Optimization: The compiler can inline functions across component boundaries, eliminate dead code globally, and optimize data usage across the entire firmware image

- Whole-Program Analysis: Static analysis tools can reason about the complete control flow and data dependencies across all components, identifying potential issues that would be invisible when components are compiled separately

- Lifetime Verification: The borrow checker can verify that references between components remain valid throughout the entire firmware execution lifecycle, preventing inter-component memory safety violations

3. Implementation Prioritization: Why the DXE Core First?

DXE Core Role in UEFI Architecture

The Driver Execution Environment (DXE) Core:

- Contains more code than any other phase of UEFI firmware

- Has complex interations with third-party drivers

- Has the most consistently initialized hardware state upon entry of any execution phase across platforms

- Because pre-DXE firmware has already initialized basic SOC functionality, the DXE Core can have a common expectation that basic hardware capabilities such as main memory and APs are initialized.

This makes it the ideal first target to improve memory safety in UEFI firmware while maximizing portability of the work across platforms and vendors.

In addition, the DXE Core implements and manages critical system services that are heavily used by all subsequent drivers and components, including:

- Driver Dispatch: Loading and executing DXE drivers and securing the execution environment of those drivers

- Event Management: Coordinating system-wide events and callbacks critical to firmware correctness

- Memory Management: Managing memory allocation, memory protections, and the memory map

- Protocol Management: Managing the global protocol database

- Service Table Management & Functionality: Providing the fundamental Boot Services and Runtime Services that all other firmware components depend upon

Service Call Coverage

Every UEFI driver (including all C drivers used in a Patina DXE Core boot) make hundreds, thousands, even millions of calls to Boot Services and Runtime Services during system boot. By securing the DXE Core in Rust, these core services now reside in a Pure Rust call stack with all key operations such as memory allocations maintained entirely in safe Rust code. In short, this offers the most effective way to immediately take advantage of Rust's reliability across the lifetime of the boot phase with the least amount of effort since one component (the core) is written in Rust benefiting hundreds of components (remaining in C) with no changes in those components.

The following table demonstrates the implementation status and call frequency of key UEFI services in the Patina DXE Core, measured during QEMU X64 boot. This shows how frequently this critical code paths are executed during a typical boot, and how many of these services are now implemented in memory-safe Rust:

| Type | Service | Implemented in Pure Rust | Call Count (QEMU X64) |

|---|---|---|---|

| Driver Support | ConnectController() | Yes | 517 |

| DisconnectController() | Yes | 0 | |

| Event | CheckEvent() | Yes | 27,347 |

| CloseEvent() | Yes | 2,082 | |

| CreateEvent() | Yes | 2,153 | |

| CreateEventEx() | Yes | (combined with CreateEvent()) | |

| SetTimer() | No (Depends on Timer Arch Protocol) | 4,063 | |

| SignalEvent() | Yes | 230,045 | |

| WaitForEvent() | Yes | 0 | |

| Image | Exit() | Yes | 133 |

| LoadImage() | Yes | 132 | |

| StartImage() | Yes | 133 | |

| UnloadImage() | Yes | 0 | |

| Memory | AllocatePages() | Yes | 1,127 |

| AllocatePool() | Yes | 19,696 | |

| CopyMem() | Yes | Not Measured | |

| FreePages() | Yes | 801 | |

| FreePool() | Yes | 14,763 | |

| GetMemoryMap() | Yes | 46 | |

| SetMem() | Yes | Not Measured | |

| Miscellaneous | CalculateCrc32() | Yes | 440 |

| ExitBootServices() | Yes | 2 | |

| InstallConfigurationTable() | Yes | 44 | |

| Protocol | CloseProtocol() | Yes | 544 |

| HandleProtocol() | Yes | 25,915 | |

| InstallMultipleProtocolInterfaces() | Yes | 0 | |

| InstallProtocolInterface() | Yes | 552 | |

| LocateDevicePath() | Yes | 646 | |

| LocateHandle() | Yes | 0 | |

| LocateHandleBuffer() | Yes | 0 | |

| LocateProtocol() | Yes | 53,480 | |

| OpenProtocol() | Yes | 54,803 | |

| OpenProtocolInformation() | Yes | 810 | |

| ProtocolsPerHandle() | Yes | 373 | |

| RegisterProtocolNotify() | Yes | 65 | |

| ReinstallProtocolInterface() | Yes | 133 | |

| UninstallMultipleProtocolInterfaces() | Yes | 0 | |

| UninstallProtocolInterface() | Yes | 10 | |

| Task Priority | RaiseTPL() | Yes | 1,181,652 |

| RestoreTPL() | Yes | 1,181,524 | |

| Timer | GetNextMonotonicCount() | No (Depends on Monotonic Arch Protocol) | Not Measured |

| SetWatchdogTimer() | No (Depends on Watchdog Arch Protocol) | 5 | |

| Stall() | No (Depends on Metronome Arch Protocol) | 502 |

Conclusion

The Patina DXE Core's monolithic Rust compilation strategy allows the firmware to maximize the benefit of Rust's memory safety guarantees at compile time. This prevents memory safety vulnerabilities from ever being introduced in the first place, rather than relying on reactive vulnerability patching after the fact. In C, a myriad of static analysis tools are run against the codebase to try to identify potential memory safety issues, but these tools can only find a subset of issues and often generate false positives. That is not necessary in Safe Rust.

Key Benefits Summary

- Comprehensive Static Analysis: Monolithic compilation enables verification across all firmware components

- Immediate Security Impact: The Patina DXE Core strategy protects the most frequently executed firmware code paths

- Strategic Migration Path: Gradual transition from C drivers to Rust components preserves existing investments

- Vulnerability Elimination: Entire classes of memory safety vulnerabilities are prevented by design rather than addressed reactively

Rust Tooling in Patina

Overview

The Patina project relies on Rust's tooling ecosystem to maintain consistent quality, security, and reliability for UEFI firmware development. This document summarizes the tooling in use, highlights how it is integrated into Patina's workflow, and contrasts the approach with common practices in C-based firmware projects where relevant.

For first-time rust tooling setup instructions, please review the project's README.

Summary Table

The table below shows the comprehensive tool suite used in Patina compared to traditional C firmware development tools:

| Tool Category | Patina (Rust) Tool | Traditional C Tool | Key Advantages |

|---|---|---|---|

| Benchmarking | cargo bench | Custom timing code | Statistical analysis, regression detection |

| Build System | cargo-make | GNU Make, CMake | Cross-platform, declarative |

| Code Formatting | rustfmt | Uncrustify, ClangFormat | Built-in, consistent, configurable |

| Documentation | rustdoc | Doxygen | Code-synchronized, testable examples |

| Security & License Auditing | cargo-deny | Manual tracking and spreadsheets | Automated vulnerability and license policy enforcement |

| Spell Checking | cspell | Manual proofreading | Automated technical dictionary |

| Static Analysis | clippy | PC-lint, PVS-Studio, Coverity | Compiler-integrated, zero-config |

| Supply Chain Auditing | cargo vet | Manual review processes | Web-of-trust based dependency auditing |

| Test Coverage | cargo-llvm-cov | gcov, OpenCppCoverage | Integrated coverage collection |

| Undefined Behavior Analysis | cargo miri | Valgrind, UBSan | Catches memory safety issues in unsafe code |

The cargo Ecosystem: Central Command Hub

Tooling Strategy

Patina prioritizes mature, off-the-shelf tooling to avoid maintaining bespoke infrastructure. Experience with other firmware projects shows that custom build systems and analyzers introduce long-term maintenance risk and complicates onboarding. Using standard Rust tooling keeps Patina aligned with the broader ecosystem and allows the Patina team to focus on firmware functionality.

cargo is the common entry point for these tools. It offers a consistent interface for building, testing, and extending the workflow without constraining developers to project-specific wrappers. Cargo provides a standardized interface that works identically across host environments (Windows, Linux, macOS) and is the central command used to orchestrate all development tasks.

cargo

Purpose: Cargo is Rust's integrated package manager and build system that serves as the central command hub for the entire development workflow, from dependency management to tool management.

Why a "Central Commans" is valuable:

- Universal Interface: Single command entry point for all development tasks (

cargo make build,cargo make test,cargo make doc, etc.) - Plugin Architecture: Extensible through cargo subcommands that integrate seamlessly with existing workflows

- Tool Discovery: Automatic discovery and integration of third-party tools

- Workspace Management: Unified management of multi-crate projects with shared configurations

Tool Installation and Management

Cargo also handles tool distribution. Patina supports two installation strategies, depending on whether a build from source is required or a pre-built binary is acceptable. In most cases, Patina uses pre-built binaries to minimize setup time.

Source-based Installation:

This will compile tools from source, which can take several minutes per tool. It is particularly useful if the user needs the tool to be built against their exact environment (e.g. rustc version, custom patches, etc.).

# Install tools from source with full compilation

cargo install cargo-make # Build orchestration

cargo install cargo-deny # Security and license auditing

cargo install cargo-llvm-cov # Coverage analysis

Binary Installation with cargo-binstall:

The cargo-binstall helper downloads pre-built artifacts when they are

available:

# Install cargo-binstall itself

cargo install cargo-binstall

# Install tools as pre-compiled binaries (much faster)

cargo binstall cargo-make

cargo binstall cargo-deny

cargo binstall cargo-llvm-cov

It is recommended to use cargo-binstall when possible to minimize setup time.

Tool Discovery and Version Management

cargo-binstall streamlines discovery and version control for external tooling by downloading pre-built binaries when

available.

Automatic Release Discovery and Selection:

- Scans GitHub releases for compatible binaries

- Detects the target architecture/platform automatically

- Downloads the appropriate binary for the current system

Version Management:

- Tracks semantic versioning of installed tools

- Provides an easy upgrade path for tool updates

- Allows specific versions of tools to be installed as needed

Security and Verification:

- Verifies checksums and signatures when available

- Downloads from trusted sources (GitHub releases)

Integration with Development Workflow

The tools available with cargo make development simple and consistent across all Patina repos:

# Standard cargo commands work universally

cargo make check # Fast compilation check

cargo make build # Full compilation

cargo make test # Run test suite

cargo make patina-test # Build with Patina test features enabled for on-platform tests

cargo make doc # Generate documentation

cargo make cov # Generate unit test code coverage

cargo make deny # Check for security and license compliance in dependencies

cargo make all # Run the same commands used in CI/CD

Developers can run targeted commands based on the changes they've made and then run the full suite (cargo make all)

when they are finished to test against the same commands that will be used in CI. This allows for quick feedback during

development without waiting for the full suite to complete.

Traditional C Firmware Tools

In a traditional C UEFI workspace, there is often developer confusion about how to build, what is supported in a given workspace and/or firmware package, and which tools to use for various tasks. In Patina, these commands are standardized, documented, and work identically across all supported platforms.

Benefits of Standard Tooling

Patina's use of community tooling has several benefits:

- Bug fixes and improvements benefit the entire ecosystem

- Easy onboarding and skill set transfer between Rust projects

- No time investment needed from firmware developers to maintain custom tools

- No licensing fees for proprietary analysis tools

- No vendor lock-in to elaborate project-specific tooling

- Security updates provided by tool maintainers

- Tools evolve with the language and best practices

These practices let Patina developers concentrate on firmware behavior instead of maintaining custom infrastructure.

Rust Tools

cargo bench (Performance Benchmarking)

Purpose: Statistical benchmarking framework for performance regression detection and optimization validation.

Value:

- Integration: Works with existing test infrastructure

- Micro-benchmarks: Fine-grained performance measurement

- Regression Detection: Automated performance regression alerts

- Statistical Analysis: Multiple iterations with outlier detection

Provides statistically rigorous benchmarking:

#![allow(unused)] fn main() { #[cfg(test)] mod benches { use super::*; use test::Bencher; // Built-in benchmarking framework #[bench] fn bench_process_buffer(b: &mut Bencher) { let mut buffer = vec![0u8; 1024]; b.iter(|| { // Automatically runs multiple iterations with statistical analysis process_buffer(&mut buffer); }); } } }

Configuration in Patina:

# Makefile.toml - Benchmarking support

[tasks.bench]

command = "cargo"

args = ["bench", "@@split(CARGO_MAKE_TASK_ARGS,;)"]

cargo-make (Advanced Build Orchestration)

Purpose: Cross-platform task runner that abstracts complex build tasks into simple commands.

Value:

- Conditional Execution: Tasks execute based on environment, features, or dependencies

- Cross-Platform Scripts: Single configuration works on Windows, Linux, macOS

- Customization: Ability to establish dependencies between tasks and add custom script logic

cargo-make provides declarative, cross-platform task definition. It is

the key extension to cargo that enables Patina to define complex workflows in a single configuration file and make

build commands consistent across all platforms and Patina repositories.

# Makefile.toml - works identically on all platforms

[tasks.check]

description = "Comprehensive code quality checks"

run_task = [

{ name = ["check_no_std", "check_std", "check_tests"], parallel = true }

]

[tasks.all]

description = "Complete PR readiness pipeline"

dependencies = [

"deny", "clippy", "build", "build-x64", "build-aarch64",

"test", "coverage", "fmt", "doc"

]

Configuration in Patina:

# Multi-target build support

[tasks.build-x64]

command = "cargo"

args = ["build", "--target", "x86_64-unknown-uefi", "@@split(NO_STD_FLAGS, )"]

[tasks.build-aarch64]

command = "cargo"

args = ["build", "--target", "aarch64-unknown-uefi", "@@split(NO_STD_FLAGS, )"]

The Patina cargo-make makefile: Makefile.toml

rustfmt (Code Formatting)

Purpose: Automatic code formatting tool that enforces consistent style across the entire codebase.

Value:

- Easy & Documented Configuration: Simple

rustfmt.tomlfile for project-wide rules - Editor Integration: Real-time formatting in editors like VS Code, IntelliJ, Vim, Emacs

- Deterministic Output: Code is always formatted identically

- Incremental Formatting: Only formats changed code for faster execution

- Zero Configuration: Works out-of-the-box with sensible defaults

Comparison to C Development:

Traditional C firmware projects have struggled with formatting consistency and formatting feedback consumes valuable code review time and energy. Some projects have adopted tools like Uncrustify, but they require extensive configuration and customization and are not used consistently across all firmware repositories. This means when a platform integrates firmware for multiple repositories, their codebase is often formatted inconsistently.

Patina uses the standard rustfmt tool to produce consistently formatted code.

Patina configuration is defined in rustfmt.toml.

rustdoc (Documentation Generation)

Purpose: Built-in documentation generator that creates interactive, searchable documentation directly from source code and comments.

Value:

- Code Synchronization: Documentation is embedded in source code, preventing drift from code

- Cross-References: Automatic linking between types, functions, and modules

- Interactive Examples: Runnable code examples in the browser

- Testable Examples: Code examples in documentation are automatically tested

Comparison to C Development:

Traditional C documentation uses external tools like Doxygen, which often become outdated and is not synchronized with the codebase:

/**

Processes a buffer by zeroing all elements.

@param[in,out] Buffer Pointer to the buffer to process.

@param[in] BufferSize Size of the buffer in bytes.

@retval EFI_SUCCESS The buffer was processed successfully.

@retval EFI_INVALID_PARAMETER Buffer is NULL or BufferSize is 0.

**/

EFI_STATUS

ProcessBuffer (

IN OUT UINT8 *Buffer,

IN UINTN BufferSize

);

Rust documentation using rustdoc is embedded and testable:

#![allow(unused)] fn main() { /// Processes a buffer by zeroing all elements. /// /// # Arguments /// * `buffer` - Mutable slice to process /// /// # Examples /// ``` /// let mut buffer = vec![1, 2, 3, 4]; /// process_buffer(&mut buffer); /// assert_eq!(buffer, vec![0, 0, 0, 0]); // This example is automatically tested! /// ``` /// /// # Safety /// This function is memory-safe due to Rust's slice bounds checking. pub fn process_buffer(buffer: &mut [u8]) { for byte in buffer.iter_mut() { *byte = 0; } } }

Configuration in Patina:

# Makefile.toml - Documentation generation

[tasks.doc]

command = "cargo"

args = ["doc", "@@split(INDIVIDUAL_PACKAGE_TARGETS, )", "--features", "doc"]

[tasks.doc-open]

command = "cargo"

args = ["doc", "--features", "doc", "--open"]

# Environment variables for strict documentation

[env]

RUSTDOCFLAGS = "-D warnings -D missing_docs"

Note: Patina defines its documentation requirements in the Documentation Reference guide.

cargo-deny (Security and License Auditing)

Purpose: Comprehensive dependency auditing tool that checks for security vulnerabilities, license compliance, and dependency policy violations.

Value:

- Dependency Policy: Prevents supply chain attacks through dependency restrictions

- License Compliance: Ensures all dependencies meet licensing requirements

- SBOM Generation: Software Bill of Materials for regulatory compliance

- Vulnerability Database: Automatically checks against RustSec advisory database

cargo-deny is configured in Patina with a single configuration file

deny.toml is tracked and reviewed like any

other source code file in the codebase.

Sample cargo-deny Output:

error[A001]: Potential security vulnerability detected

┌─ Cargo.lock:123:1

│

123 │ openssl-sys v0.9.60

│ ------------------- security vulnerability ID: RUSTSEC-2023-0044

│

= advisory: https://rustsec.org/advisories/RUSTSEC-2023-0044

= Affected versions: < 0.9.61

= Patched version: >= 0.9.61

Configuration in Patina:

# deny.toml - Comprehensive security and compliance policy

[advisories]

ignore = [

{ id = "RUSTSEC-2024-0436", reason = "Macros for token pasting. No longer maintained per readme." }

]

[licenses]

allow = ["Apache-2.0", "BSD-2-Clause-Patent", "MIT"]

[bans]

deny = [

{ crate = "tiny-keccak", reason = "Not updated in 5 years. Use alternative." }

]

Key Documentation: cargo-deny Book

cargo vet (Supply Chain Auditing)

Purpose: Supply chain security tool that provides a web-of-trust based auditing system for Rust dependencies, to check that dependencies have been reviewed by trusted auditors.

Value:

- Audit Sharing: Leverages existing audits from Mozilla, Google, and other organizations to reduce audit burden

- Open Device Partnership shares audits across the organization in OpenDevicePartnership/rust-crate-audits

- Delta Auditing: Focuses audit effort on changes between versions rather than full re-audits

- Import Management: Allows importing audits from other projects and organizations

- Policy Enforcement: Requires explicit audits or exemptions for all dependencies

- Web of Trust: Builds on audits from trusted organizations and maintainers in the Rust ecosystem

Comparison to cargo-deny:

cargo vet provides a different approach to supply chain security compared to

cargo-deny. While cargo-deny focuses on known vulnerabilities and license compliance, cargo vet ensures that

dependencies have been reviewed by trusted auditors before they can be used and it tracks unaudited dependencies

so they can be prioritized for review.

cargo-deny is reactive - it flags known issues after they're discovered. cargo vet is proactive - it requires

confirmation that dependencies are safe before they can be used.

Usage in Patina:

cargo vet # Check all dependencies are audited

cargo vet check # Check without updating imports

cargo vet certify # Certify a new dependency

cargo vet add-exemption # Add temporary exemption for unaudited dependency

cargo vet prune # Remove unused audits

Key Documentation: cargo vet Book

cspell (Spell Checking)

Purpose: Spell checker that is applied against all technical documentation and source code with the ability to use programming-language specific and custom dictionaries.

Value:

- Automation: Automated spell checking in local and sever CI

- Custom Dictionaries: Supports project-specific terminology and abbreviations

- Multi-Language: Supports code comments in multiple programming languages

- Technical Dictionaries: Built-in support for programming terms, acronyms, and technical jargon including the import of third-party dictionaries

Configuration in Patina:

# cspell.yml example in Patina

language: en

dictionaries: ["makefile", "rust"]

ignorePaths: ["**/target/**", "**/book/**"]

ignoreRegExpList: ["/0x[0-9a-fA-F]+/", "asm!\\(.*?\\);"]

words:

- aarch # Architecture terms

- acpibase # ACPI terminology

- dxefv # UEFI-specific terms

- edkii # EDK II references

...

Further Documentation:

clippy (Static Analysis and Linting)

Purpose: Advanced static analysis tool that catches bugs, performance issues, and style problems beyond what the compiler detects.

Value:

- Compiler Integration: Leverages Rust's compiler infrastructure for deep semantic analysis

- Memory Safety: Additional checks beyond Rust's built-in memory safety guarantees

- Performance Insights: Identifies inefficient patterns specific to systems programming

- UEFI-Specific Lints: Custom lints can be added for firmware-specific patterns

Comparison to C Development:

Traditional C static analysis tools like Coverity and CodeQL are expensive, require extensive configuration, and often produce false positives:

// C code that passes basic compilation but has subtle bugs

void ProcessBuffer(UINT8* Buffer, UINTN Size) {

UINTN Index = 0;

while(Index <= Size) { // Off-by-one error - tools may miss this

Buffer[Index] = 0; // Potential buffer overflow

Index++;

}

}

Clippy provides analysis that detects and suggests design changes to improve the code:

#![allow(unused)] fn main() { // Clippy warns about potential issues fn process_buffer(buffer: &mut [u8]) { // Clippy suggests using an iterator instead of manual indexing for byte in buffer.iter_mut() { // Automatic bounds checking *byte = 0; } } }

Sample Clippy Output:

warning: this loop could be written as a `for` loop

--> src/lib.rs:10:5

|

10 | while index < buffer.len() {

| ^^^^^^^^^^^^^^^^^^^^^^^^^^^^ help: try: `for (index, item) in buffer.iter_mut().enumerate()`

|

= help: for further information visit https://rust-lang.github.io/rust-clippy/master/index.html#while_let_loop

Configuration in Patina:

# Makefile.toml clippy configuration

[tasks.clippy]

command = "cargo"

args = ["clippy", "--all-targets", "--all-features", "--", "-D", "warnings"]

Further Documentation:

cargo miri (Undefined Behavior Detection)

Purpose: Executes tests under the Miri interpreter to detect memory safety

issues resulting in undefined behavior (UB). This is particularly useful in unsafe code. For example, out-of-bounds

pointer arithmetic, use-after-free, invalid use of uninitialized data, alignment violations, etc.

In Patina, the goal is to write as little unsafe code as possible, but some low-level operations require it. Patina

then builds safe abstractions on top of that unsafe code that all other Rust code in Patina uses. Miri helps ensure

that this minimal unsafe layer of code is correct and that the safe abstractions built on top of it are valid.

Value:

- Early UB Detection: Catches issues such as use-after-free, data races in

Synctypes, and invalid pointer math. - No Extra Harness: Reuses existing unit tests, so validation focuses on expanding coverage rather than wiring up custom tooling.

- Targeted Verification: Developers can gate changes that introduce new

unsafecode on passing Miri runs to keep Patina's invariants explicit and documented. - Unsafe Code Validation: Exercises

unsafeblocks under an interpreter that checks aliasing, alignment, and initialization rules that the optimizer assumes are upheld.

Comparison to C Development:

Traditional firmware workflows rely on Valgrind or compiler sanitizers, which struggle with firmware-specific runtime constraints and often cannot model the exact aliasing rules required by Rust's unsafe code contracts:

Running the test with cargo miri test produces a detailed error describing the violated aliasing rule, letting the

developer either refactor to safe abstractions or document the invariants with additional assertions.

Usage in Patina:

cargo miri setup # Configure the toolchain the first time

cargo miri test -p patina_mm # Run interpreter-backed tests for a specific crate

Patina developers prioritize running Miri on modules that contain low-level memory manipulation, MMIO shims, or FFI

shims. Documenting why unsafe blocks remain valid after fixes helps future reviewers and keeps invariants explicit.

Further Documentation:

cargo-llvm-cov (Test Coverage Analysis)

Purpose: LLVM-based code coverage collection and reporting tool that integrates seamlessly with Rust's testing infrastructure.

Value:

- Integration: Works with standard

cargo testwithout additional setup - Multiple Formats: Supports LCOV, HTML, JSON output formats for CI/CD integration

- Precise Coverage: LLVM instrumentation provides accurate line and branch coverage

- Threshold Enforcement: Fails builds when coverage drops below specified levels

Code coverage in C firmware has traditionally been difficult to set up and maintain, often requiring custom scripts and limitations in the types of tests that can be measured and the host environments supported.

Rust code coverage with cargo-llvm-cov works automatically with existing tests:

#![allow(unused)] fn main() { // Automatic coverage collection with zero additional code #[cfg(test)] mod tests { use super::*; #[test] // Automatically included in coverage fn test_buffer_processing() { let mut buffer = vec![1, 2, 3, 4]; process_buffer(&mut buffer); assert_eq!(buffer, vec![0, 0, 0, 0]); } } }

Configuration in Patina:

Patina has several tasks defined in Makefile.toml to collect coverage data and generate reports in different formats:

cargo make coverage-collect: Runs tests and collects coverage data without generating reports. This allows multiple report formats to be generated from the same data efficiently.cargo make coverage-lcov: Generates an LCOV coverage report from the collected data.cargo make coverage-html: Generates an HTML coverage report from the collected data.

[tasks.coverage]

description = "Build and run all tests and calculate coverage (generates both LCOV and HTML outputs efficiently)."

dependencies = ["coverage-collect", "coverage-lcov", "coverage-html"]

Note: Patina previously used

tarpaulinfor coverage, but switched tocargo-llvm-covas it has proven to be more accurate using LLVM-based instrumentation.

Code Coverage During Development

A nice feature of code coverage in Rust is that it can integrate seamlessly with IDEs like VS Code. For example, the